The Impact of Social Media Algorithms on Youth Mental Health

A Call for Responsible Practices and Government Intervention

In an era dominated by social media, where platforms like Facebook, Twitter, and Instagram wield immense influence, the rise of algorithm-driven content recommendation systems has brought a concerning issue to light: the effect of these algorithms on the mental health of young people. Social media algorithms are designed to maximize user engagement and keep users glued to their screens. The result is a paradox: the same algorithms that boost platform interaction are also inadvertently contributing to a surge in mental health issues among young users. The tailored content they serve tends to create echo chambers and can intensify feelings of loneliness, inadequacy, and anxiety.

Effects on Mental Health:

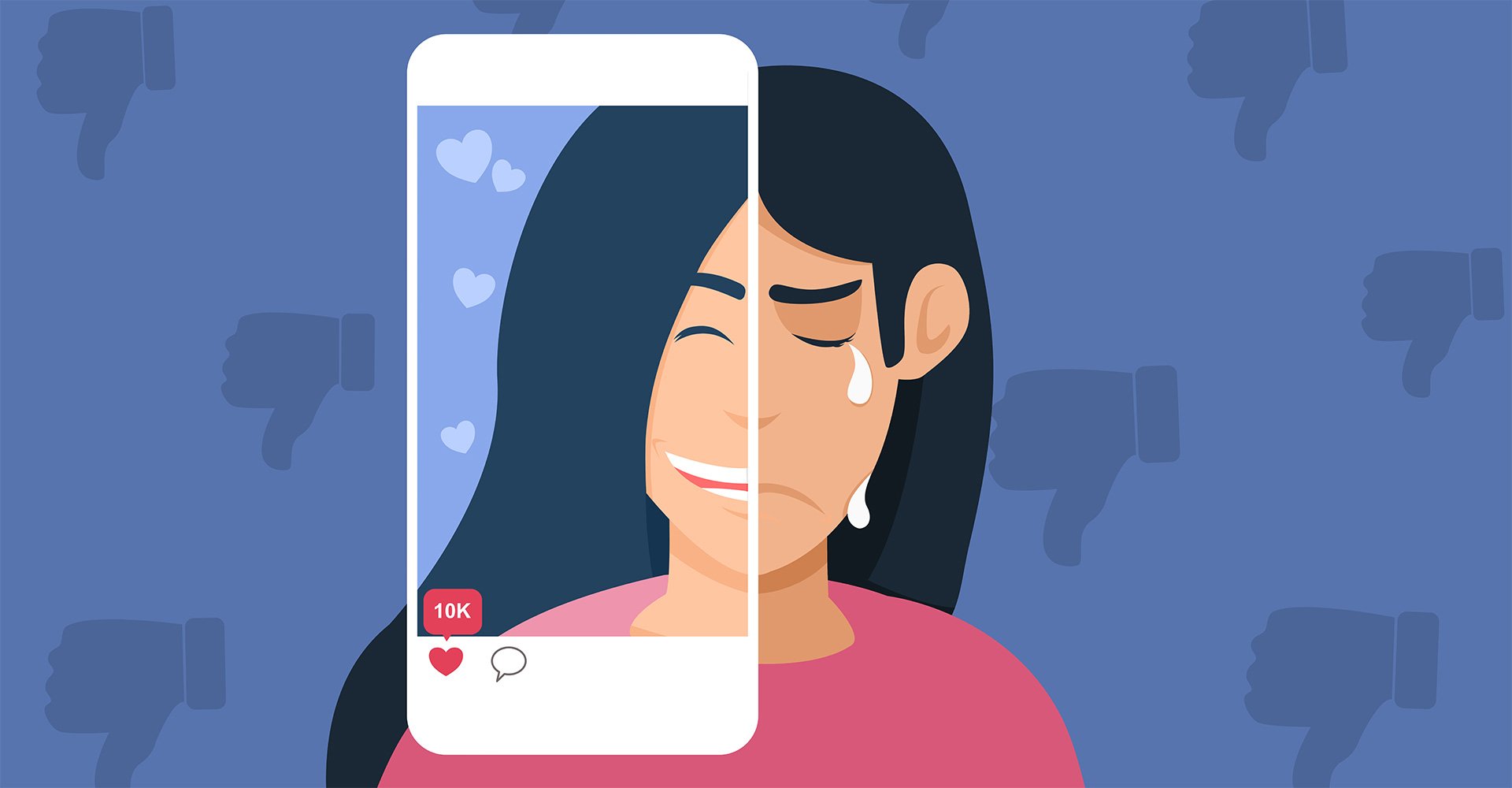

1. Social Comparison and Self-Esteem:

Algorithm-driven content often portrays an idealized, curated version of others' lives, prompting constant social comparison among users. This perpetual exposure to seemingly perfect lifestyles can erode self-esteem. As people continually measure themselves against these unrealistic standards, feelings of inadequacy and self-doubt can emerge, potentially fueling mental health issues.

2. Information Bubbles and Polarization:

Algorithms tailor content to users' preferences, inadvertently creating information bubbles that reinforce existing beliefs and perspectives. While this offers a personalized experience, it also has a concerning consequence: the reinforcement of ideological divisions. By limiting exposure to diverse viewpoints, algorithmically curated content can erode critical thinking and intellectual open-mindedness, contributing to societal polarization and hindering public discourse.

3. Addictive Behavior and Fear of Missing Out (FOMO):

The real-time, personalized nature of algorithm-driven content plays on users' inherent psychological tendencies and can trigger addictive behaviors. The constant need to check for updates and new content can lead to compulsive usage patterns in which individuals become reliant on their devices. This behavior, coupled with the Fear of Missing Out (FOMO), generates heightened anxiety and a sense of being left out, both of which can take a toll on mental well-being.

4. Cyberbullying Amplification:

Algorithms, while designed to engage users, can unintentionally amplify harmful content and cyberbullying. Negative or hurtful material spreads rapidly online and can create a hostile atmosphere. Algorithmic distribution can magnify the reach of such content, intensifying the impact on victims and potentially causing severe emotional distress. This amplification of cyberbullying makes digital spaces less safe and adversely affects mental health.

Mitigating Algorithmic Impact:

1. User Empowerment:

To mitigate the potential negative impact of algorithm-driven content, online platforms should prioritize user empowerment by giving users a high degree of control over the content they encounter. Granular customization options and content filtering tools allow individuals to curate their digital experiences according to their preferences and values. This shift toward user-centric control lets individuals shape their online interactions and reduces the likelihood of exposure to content that may adversely affect their mental health.

2. Algorithm Transparency:

Transparency surrounding algorithmic processes is a crucial step towards minimizing their impact on mental well-being. Companies should strive to provide users with clear and comprehensible insights into how algorithms function. This transparency not only builds trust between users and platforms but also enables users to understand the mechanisms behind content recommendations. When users are aware of why certain content is being presented to them, they can make more informed decisions about their online engagement, thereby diminishing potential feelings of manipulation or uncertainty.

3. Promote Diverse Content:

To counteract the formation of information bubbles and polarization, promoting diverse content distribution through algorithms is imperative. Online platforms should actively encourage exposure to a wide range of perspectives and viewpoints. By deliberately incorporating content that challenges existing beliefs and introduces alternative narratives, algorithms can help users develop a more comprehensive understanding of complex issues. This approach fosters critical thinking, intellectual growth, and open dialogue, ultimately contributing to a healthier online environment that supports mental well-being.

Corporate Responsibility:

1. Ethical Algorithm Design:

Technology companies bear the responsibility of prioritizing user well-being when designing algorithms. By integrating ethical considerations into algorithmic development, companies can create safeguards that minimize negative emotional impacts on users. This involves carefully assessing how content is presented and ensuring that the algorithmic processes prioritize mental health and emotional safety.

2. User Feedback and Well-being Metrics:

Regularly gathering user feedback and data is crucial for assessing the mental health impact of algorithms. By actively seeking input from users and analyzing well-being metrics, companies can gain insights into how their algorithms influence user experiences. This data-driven approach enables iterative improvements that align with users' mental well-being needs, fostering a more responsible and user-centric digital environment.

3. Content Moderation and Intervention:

To uphold corporate responsibility, technology companies must implement robust content moderation measures. By employing advanced tools and human oversight, platforms can identify and address harmful content before it reaches users. Timely intervention not only prevents the amplification of negative experiences but also contributes to cultivating a safe and supportive online space that promotes mental health.

Government's Vital Role:

1. Digital Literacy Education:

Governments play a vital role in promoting responsible online behavior and mental health among the younger generation. By integrating digital literacy and mental health education into school curricula, they empower students with the necessary skills to navigate the digital landscape safely. Equipping youth with critical thinking abilities and emotional resilience helps them make informed choices and develop a healthier relationship with technology.

2. Public Awareness Campaigns:

Initiating public awareness campaigns is essential to educate both young users and parents about the potential mental health risks associated with excessive social media use. By raising awareness about the impact of algorithms, content consumption, and digital engagement on mental well-being, governments can foster a culture of informed decision-making and responsible online habits.

3. Regulation and Oversight:

Governments hold the responsibility of ensuring the ethical use of algorithms and safeguarding user well-being. To achieve this, they should establish clear and comprehensive guidelines for ethical algorithm design. Additionally, regulatory frameworks can be put in place to hold technology companies accountable for their impact on mental health. By enforcing these regulations, governments encourage responsible algorithmic practices that prioritize user mental well-being.

As social media algorithms continue to shape the digital landscape, their impact on youth mental health demands immediate attention and action. Technology companies such as Meta and Twitter hold the responsibility of redefining algorithmic practices to prioritize user well-being. At the same time, governments must step up and assume a pivotal role in shaping mental health education and safeguarding the mental well-being of the younger generation. Together, responsible algorithmic design, user empowerment, and comprehensive mental health education will be instrumental in mitigating the adverse effects of social media algorithms on the mental health of young people.

(The author is a Nepali journalist and international strategic affairs analyst who covers topics pertaining to international affairs, Nepali politics, society, and IT and technology.)
